9 Vetted n8ked Alternatives: Safer, Ad-Free, Privacy-Focused Picks for 2026

These nine alternatives let you generate AI-powered visuals and fully synthetic “AI girls” without touching non-consensual “AI undress” or DeepNude-style features. Every pick is ad-free and privacy-first, and each either runs on-device or operates under transparent policies suited to 2026.

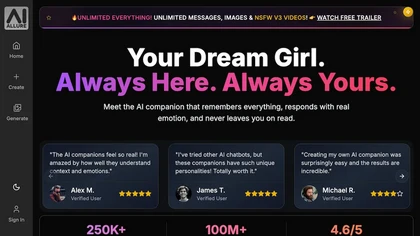

People search for “n8ked” and similar nude apps expecting fast, realistic results, but the trade-off is risk: non-consensual fakes, questionable data collection, and unlabeled content that spreads harm. The options below prioritize consent, on-device processing, and provenance so you can work creatively without crossing legal or ethical lines.

How did we vet these safer options?

We prioritized on-device generation, no ads, explicit bans on non-consensual media, and transparent data-retention policies. Where cloud models are involved, they operate under mature usage policies, audit logs, and Content Credentials.

Our review centered on five factors: whether the app runs locally without telemetry, whether it is ad-free, whether it blocks or discourages “clothes remover” functionality, whether it supports content provenance or watermarking, and whether its terms forbid non-consensual explicit or deepfake use. The result is a shortlist of practical, creator-grade tools that avoid the “online nude generator” model entirely.

Which tools qualify as ad-free and privacy-centric in 2026?

Local open-source suites and professional offline apps lead the field because they minimize data leakage and tracking. You’ll find Stable Diffusion front ends, 3D human creators, and professional editors that keep sensitive media on your own machine.

We excluded undress tools, “AI girlfriend” builders that manipulate real people’s photos, and anything that turns clothed images into “realistic nude” content. Responsible creative workflows center on synthetic models, licensed datasets, and signed releases whenever real people are involved.

The 9 privacy-first alternatives that actually work in 2026

Use these if you want control, quality, and privacy without touching a clothes-removal tool. Each pick is functional, widely adopted, and doesn’t rely on false “AI undress” promises.

Automatic1111 Stable Diffusion Web UI (Local)

A1111 is one of the most widely used local interfaces for Stable Diffusion, giving you fine-grained control while keeping all data on your computer. It’s ad-free and extensible, and it delivers high-quality output with whatever safeguards you configure.

The web UI runs entirely on-device after setup, eliminating remote uploads and reducing data exposure. You can create fully synthetic people, refine base shots, or develop concept art without invoking any “clothes remover” mechanics. Extensions add ControlNet, inpainting, and upscaling, and you decide which models to use, how to watermark output, and what to block. Responsible artists limit themselves to synthetic people or media created with written consent.
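If you script A1111 rather than clicking through the browser, its HTTP API (enabled by launching the web UI with the `--api` flag) can stay on the loopback interface, so prompts and images never leave your machine. The sketch below assumes the default local address `http://127.0.0.1:7860` and the `/sdapi/v1/txt2img` endpoint; the payload is a minimal subset of the fields that endpoint accepts, and the helper names (`is_loopback`, `build_txt2img_request`, `generate`) are hypothetical. It adds a guard that refuses any non-local host.

```python
import base64
import json
import urllib.request
from ipaddress import ip_address
from urllib.parse import urlparse

# Assumption: the web UI was launched locally with --api on its default port.
A1111_URL = "http://127.0.0.1:7860"


def is_loopback(host) -> bool:
    """True only for 'localhost' or a loopback IP literal such as 127.0.0.1 or ::1."""
    if host == "localhost":
        return True
    try:
        return ip_address(host).is_loopback
    except ValueError:
        return False  # any other DNS name (or a missing host) counts as non-local


def build_txt2img_request(prompt: str, steps: int = 20,
                          base_url: str = A1111_URL) -> urllib.request.Request:
    """Build a POST to the local txt2img endpoint; refuse non-loopback hosts."""
    host = urlparse(base_url).hostname
    if not is_loopback(host):
        raise ValueError(f"refusing to send prompts to non-local host {host!r}")
    payload = {"prompt": prompt, "steps": steps, "width": 512, "height": 512}
    return urllib.request.Request(
        base_url.rstrip("/") + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, out_path: str) -> None:
    """Send the request and save the first returned image (a base64-encoded PNG)."""
    with urllib.request.urlopen(build_txt2img_request(prompt)) as resp:
        result = json.load(resp)
    with open(out_path, "wb") as f:
        f.write(base64.b64decode(result["images"][0]))
```

Only `generate` needs a running web UI; building and checking the request works offline. Keeping the host check in the request builder means a mistyped or copied remote URL fails loudly instead of silently uploading prompts.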

ComfyUI (Node‑based Local Pipeline)

ComfyUI is a visual, node-based workflow builder for Stable Diffusion, well suited to advanced users who need reproducibility and privacy. It’s ad-free and runs locally.

You build end-to-end graphs for text-to-image, image-to-image, and advanced control, then save them as presets for consistent results. Because it runs offline, sensitive data never leaves your drive, which matters if you work with consenting models under confidentiality agreements. The graph view lets you audit exactly what the pipeline is doing, supporting ethical, traceable workflows with optional visible labels on output.

DiffusionBee (Mac, Offline Stable Diffusion XL)

DiffusionBee offers simple SDXL generation on macOS with no sign-up and no ads. It’s privacy-conscious by design, since it runs entirely on-device.

For artists who don’t want to manage installs or config files, it’s a clean entry point. It works well for synthetic portraits, design studies, and visual exploration that steer clear of any “AI undress” behavior. You can keep libraries and prompts local, apply your own safety filters, and export with metadata tags so collaborators know an image is AI-generated.
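One app-independent way to attach such a tag is a PNG `tEXt` chunk, which the PNG specification defines as a Latin-1 keyword, a null byte, and a text value, stored in a chunk with a length, type, and CRC-32. The sketch below inserts one directly after the `IHDR` header and reads text chunks back. The keyword `AI-Generated` is a hypothetical label of our choosing, not a registered key, and the helper names are likewise illustrative.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Offset where IHDR ends: signature (8) + length (4) + type (4) + 13 data bytes + CRC (4).
IHDR_END = len(PNG_SIGNATURE) + 4 + 4 + 13 + 4


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize a PNG chunk: big-endian length, type, data, CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def add_text_chunk(png: bytes, keyword: str, text: str) -> bytes:
    """Return a copy of `png` with a tEXt chunk inserted directly after IHDR."""
    if not png.startswith(PNG_SIGNATURE) or png[12:16] != b"IHDR":
        raise ValueError("input is not a PNG that starts with IHDR")
    if not 1 <= len(keyword) <= 79:
        raise ValueError("PNG keywords must be 1-79 characters")
    data = keyword.encode("latin-1") + b"\x00" + text.encode("latin-1")
    return png[:IHDR_END] + make_chunk(b"tEXt", data) + png[IHDR_END:]


def read_text_chunks(png: bytes) -> dict:
    """Collect every tEXt keyword/value pair, stopping at IEND."""
    found, pos = {}, len(PNG_SIGNATURE)
    while pos + 8 <= len(png):
        length, ctype = struct.unpack(">I4s", png[pos:pos + 8])
        if ctype == b"tEXt":
            key, _, value = png[pos + 8:pos + 8 + length].partition(b"\x00")
            found[key.decode("latin-1")] = value.decode("latin-1")
        if ctype == b"IEND":
            break
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
    return found
```

To tag a real export, read the file in binary mode, pass the bytes through `add_text_chunk`, and write the result to a new file. Plain text chunks can be stripped or edited by anyone, so they suit team-internal labeling; Content Credentials are the tamper-evident option.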

InvokeAI (Offline Diffusion Package)

InvokeAI is a polished local diffusion toolkit with a streamlined interface, advanced inpainting, and robust model management. It’s ad-free and geared toward commercial pipelines.

The project emphasizes usability and guardrails, which makes it a strong pick for studios that need consistent, ethical output. Adult-content producers who require explicit releases and provenance can generate fully synthetic models while keeping source data local. InvokeAI’s workflow tools lend themselves to documented consent and content labeling, which matters in 2026’s tighter policy environment.

Krita (Professional Digital Painting, Open Source)

Krita is not an AI nude generator; it’s an advanced painting app that runs fully on-device and carries no ads. It complements diffusion tools for ethical post-processing and compositing.

Use it to retouch, paint over, or composite synthetic renders while keeping assets private. Its brush engines, colour management, and composition tools help artists refine anatomy and lighting by hand, sidestepping the quick-and-dirty undress-app mindset. When real people are involved, you can embed consent and license information in file metadata and export with clear attributions.

Blender + MakeHuman (3D Human Creation, On-Device)

Blender with MakeHuman lets you build virtual human figures on your workstation with no ads or cloud uploads. It’s a consent-safe route to “AI characters” because the people are entirely synthetic.

You can sculpt, pose, and render photoreal figures without touching anyone’s real photo or likeness. Blender’s texturing and shading pipelines deliver high fidelity while preserving privacy. For adult creators, the pair supports a fully virtual workflow with clear model rights and no risk of non-consensual deepfake compositing.

DAZ Studio (3D Avatars, Free to Start)

DAZ Studio is a mature, full-featured platform for building realistic human figures and scenes locally. It’s free to start, ad-free, and asset-based.

Creators use DAZ to build pose-accurate, fully synthetic scenes that require no “AI undress” manipulation of real people. Asset licensing is clear, and rendering happens on your own machine. It’s a practical choice for anyone who wants realism without legal exposure, and it pairs well with Krita or Photoshop for finishing work.

Reallusion Character Creator + iClone (Pro 3D Humans)

Reallusion’s Character Creator with iClone is a professional-grade suite for photorealistic digital humans, animation, and facial capture. It’s local software built for professional workflows.

Studios adopt it when they need lifelike results, version control, and clear IP rights. You can build consented digital doubles from scratch or from approved scans, preserve provenance, and render final frames offline. It’s not a clothes-removal app; it’s a system for creating and animating characters you fully control.

Adobe Photoshop with Firefly (Generative Fill + C2PA)

Photoshop’s Generative Fill, powered by Firefly, brings licensed, traceable AI to a familiar editor, with Content Credentials (C2PA) support. It’s subscription software with strong usage policies and provenance tracking.

Although Firefly blocks explicit NSFW prompts, it excels at ethical retouching, compositing synthetic characters, and exporting with cryptographically verifiable Content Credentials. When you collaborate, those credentials help downstream platforms and collaborators identify AI-assisted work, discouraging misuse and keeping your pipeline compliant.

Side‑by‑side comparison

Every tool listed emphasizes local control or mature usage policies. None are “undress apps,” and none promote non-consensual deepfakes.

| Tool | Type | Runs Locally | Ads | Data Handling | Best For |
|---|---|---|---|---|---|
| Automatic1111 Stable Diffusion Web UI | Local AI generator | Yes | No | Offline files, user-chosen models | Synthetic portraits, editing |
| ComfyUI | Node-based AI workflow | Yes | No | Local, reproducible graphs | Pro workflows, transparency |
| DiffusionBee | macOS AI app | Yes | No | Fully on-device | Simple SDXL, no setup |
| InvokeAI | Local diffusion suite | Yes | No | Local models, projects | Commercial use, consistency |
| Krita | Digital painting | Yes | No | Local editing | Post-processing, compositing |
| Blender + MakeHuman | 3D human creation | Yes | No | Local assets, renders | Fully synthetic models |
| DAZ Studio | 3D avatars | Yes | No | Local scenes, licensed assets | Realistic posing/rendering |
| Reallusion CC + iClone | Pro 3D humans/animation | Yes | No | Local pipeline, enterprise options | Lifelike humans, animation |
| Photoshop + Firefly | Editor with generative AI | App yes; Generative Fill runs in Adobe’s cloud | No | Content Credentials (C2PA) | Ethical edits, provenance |

Is AI “undress” content legal if everyone consents?

Consent is the floor, not the ceiling: you also need age verification, a written model release, and respect for likeness and publicity rights. Many jurisdictions also regulate explicit-content distribution and record-keeping, and platforms layer their own policies on top.

If any subject is a minor or cannot consent, it’s illegal. Even with consenting adults, platforms routinely block “AI undress” content and unauthorized deepfake impersonations. The safe route in 2026 is synthetic avatars or clearly released productions, labeled with Content Credentials so downstream hosts can verify provenance.

Little-known but verified facts

First, the original DeepNude app was pulled in 2019, but copies and “nudify” clones persist through forks and messaging bots, often harvesting the photos users upload. Second, the C2PA standard behind Content Credentials saw broad adoption in 2025-2026 among Adobe, technology companies, and major news outlets, enabling cryptographic provenance for AI-edited media. Third, on-device generation sharply shrinks the attack surface for data exfiltration compared with web tools that log prompts and uploads. Finally, most major platforms now explicitly ban non-consensual sexual deepfakes and act faster when reports include hashes, timestamps, and provenance data.

How can you safeguard yourself against non‑consensual deepfakes?

Limit high-resolution public photos of your face, add visible watermarks, and set up reverse-image and name alerts. If you find abuse, save URLs and timestamps, file takedown requests with evidence, and preserve copies for law enforcement.
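Evidence is easier to act on when each item carries its URL, a UTC capture time, and a SHA-256 hash of the saved screenshot or file, the same hash-and-timestamp detail that speeds up platform responses. The sketch below is a minimal, hypothetical logging helper using only the Python standard library; the record fields and the JSON Lines log file are our own assumptions, not a format any platform or agency requires.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in 64 KiB blocks so large videos don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(65536), b""):
            digest.update(block)
    return digest.hexdigest()


def log_evidence(url: str, saved_copy: Path, log_path: Path, note: str = "") -> dict:
    """Append one evidence record (one JSON object per line) and return it."""
    record = {
        "url": url,
        "captured_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "file": saved_copy.name,
        "sha256": sha256_file(saved_copy),
        "note": note,
    }
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return record
```

Appending rather than rewriting keeps earlier records untouched, and the hash lets you later show that the file you hand over is the one you captured.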

Ask photographers to publish with Content Credentials so manipulated copies are easier to spot by comparison. Use privacy settings that limit scraping, and never upload intimate material to untrusted “NSFW AI apps” or “online nude generator” sites. If you’re a creator, keep a consent ledger and store copies of IDs, signed releases, and proof that everyone involved is of legal age.
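A consent ledger can be as simple as an append-only list in which each entry stores the hash of the previous one, so later edits to older records become detectable. The sketch below is a hypothetical structure, not a legal standard: it assumes 18 as the age of majority (check your jurisdiction), stores only a hash of the scanned ID rather than the image, and leaves secure storage and backups to you.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import date

AGE_OF_MAJORITY = 18  # assumption: adjust for your jurisdiction


def age_on(birth: date, on: date) -> int:
    """Whole years between `birth` and `on`."""
    return on.year - birth.year - ((on.month, on.day) < (birth.month, birth.day))


@dataclass(frozen=True)
class ConsentEntry:
    person: str
    birth_date: str     # ISO 8601, e.g. "1990-04-12"
    signed_on: str      # date the release was signed
    scope: str          # what the release covers
    id_doc_sha256: str  # hash of the scanned ID, which is stored separately
    prev_hash: str      # hash of the previous entry ("" for the first)

    def entry_hash(self) -> str:
        canonical = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(canonical).hexdigest()


class ConsentLedger:
    def __init__(self) -> None:
        self.entries: list = []

    def add(self, person: str, birth_date: str, signed_on: str,
            scope: str, id_doc_sha256: str) -> ConsentEntry:
        age = age_on(date.fromisoformat(birth_date), date.fromisoformat(signed_on))
        if age < AGE_OF_MAJORITY:
            raise ValueError(f"{person} was under {AGE_OF_MAJORITY} when signing")
        prev = self.entries[-1].entry_hash() if self.entries else ""
        entry = ConsentEntry(person, birth_date, signed_on, scope, id_doc_sha256, prev)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """True if every entry still points at the hash of its predecessor."""
        prev = ""
        for entry in self.entries:
            if entry.prev_hash != prev:
                return False
            prev = entry.entry_hash()
        return True
```

The chain only protects entries that have a successor, so keep a copy of the newest entry’s hash somewhere separate to cover the latest record as well.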

Concluding takeaways for 2026

If you’re tempted by an “AI undress” generator that promises a realistic nude from any clothed photo, walk away. The safest route is synthetic, fully licensed, or fully consented workflows that run on your device and leave a provenance trail.

The nine tools above deliver quality without the surveillance, ads, or ethical landmines. You keep control of your inputs, you avoid harming real people, and you get stable, professional workflows that won’t collapse when the next nude app gets banned.